Machine Learning Baby Photos

I’m lucky. I was here a long time before Instagram, so all the photos of me as a child have vanished into time. Or been lost in someone’s attic. Today, however, there are plenty of babies whose histories are slavishly recorded online by their parents, and I often wonder how these kids, when they become teenagers and adults, are going to feel about this indelible photographic history, which includes a high proportion of diaper pooping.

That’s one thing I love about the computer industry; its technology is still in its infancy, the baby photo stage. Okay, maybe it’s toddling. But the point is that while hardware and software have both evolved incredibly over the decades I’ve been in the industry, there’s still so much potential for growth in both areas.

For example, the wheel is an old, mature technology. Perhaps we try to build it with new materials, or put some grooves in different places. But ultimately, tomorrow’s wheel is pretty likely to be the same as yesterday’s wheel: round and on an axle. Computers, however, are still new. Unlike the wheel, they’re continuing to change rapidly and show no signs of slowing down. Some people even believe in The Singularity, a nerd rapture driven by the exponential acceleration of computing technology.

But even if you don’t believe in the Singularity, or the possibility of sentient AI, it’s hard not to be excited by technological development. Yesterday’s computer was the size of a football field and built using vacuum tubes. It took input from punched paper cards. It didn’t connect to other computers because there weren’t any. Today’s computer is made from tiny transistors, fits in your pocket and connects wirelessly to most of the other computers in the world. It knows when you touch it and it may be able to touch you back. And tomorrow’s computer? It may be invisible, embedded in an otherwise inanimate object—or even embedded in you. It might be made from DNA, not transistors. It might hear your thoughts and whisper back with its own. It may have absolutely nothing in common with yesterday’s or today’s computer, and yet still be recognizable as the same kind of thing.

Machines, Learning

One of the biggest and most exciting advances sweeping the computer industry today is machine learning. (Some call it AI, but in my mind, AI conjures images of Skynet and HAL9000, both of which are far more aware and sentient, let alone malevolent, than today’s machines are, or could ever be.) To understand what machine learning actually is, it’s necessary to think about how we use computers. Typically, we use them as tools to solve problems. We do that by encoding our own knowledge into programs, which means first figuring out how we figure things out. The result is that programs implement algorithms, which solve problems for us.

It’s easy to do this for some types of problems. Think of a spreadsheet. It’s simple to describe a set of rules for implementing a minimal spreadsheet: remember what gets stored in a cell, then add the selected cells in a column or row for a SUM(). These are simple algorithms, and humans are good at writing them.
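To make that concrete, here is a minimal sketch of those two rules in Python. The `Sheet` class and its method names are invented for illustration; a real spreadsheet does far more, but the core idea fits in a few lines.

```python
class Sheet:
    """A toy spreadsheet: remember cell values, add up a run of cells."""

    def __init__(self):
        # Maps (column letter, row number) to the number stored there.
        self.cells = {}

    def set(self, column, row, value):
        # Rule 1: remember what gets stored in a cell.
        self.cells[(column, row)] = value

    def sum_column(self, column, first_row, last_row):
        # Rule 2: add the selected cells; empty cells count as zero.
        return sum(
            self.cells.get((column, row), 0)
            for row in range(first_row, last_row + 1)
        )


sheet = Sheet()
sheet.set("A", 1, 10)
sheet.set("A", 2, 32)
total = sheet.sum_column("A", 1, 3)  # row 3 is empty and adds nothing
```

Every step here is something a person can state as a rule, which is exactly why this kind of program is easy to write by hand.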

Some problems are much, much harder to describe using algorithms. Think about driving a car. It’s easy to say “stop at red lights” or “do not hit a pedestrian”. It’s much more difficult to write algorithms that do these things reliably. And a lot of the time it’s not just functional or mechanical difficulty that holds up the process; it’s semantic. How do you define “a pedestrian”? How do you determine the difference between a red light, a brake light, a reflection, and a light in someone’s window? What does “red” actually mean? How do you write an algorithm that responds to scenarios, rather than one that blindly follows rules?

As humans, we take our ability to improvise and decide for granted. Our evolution and upbringing have taught us how to do these things, and we have a lot of special wetware in our heads that helps us. But programming a computer in the traditional way means we can’t rely on these implicit mechanisms. We have to spell out, in painstaking detail, every tiny reaction to every detail and fluctuation.

At least, we used to. Machine learning radically changes that process. Previously, we’ve written software by embedding our own knowledge directly into the program. Now, we can write a program that can learn. Instead of channeling our knowledge and expertise into solving the problem, we can channel it into building a platform which we can teach to solve the problem. Now, we don’t need to write algorithms which make a decision about whether a light is green, yellow, or red, and whether it’s actually a traffic light. Instead, we can build a neural network which we train to recognize traffic lights and their states. We simply expose the network to thousands (or millions) of examples of things that are or are not traffic lights.

If we wrote a program to detect red traffic lights, it would consist of specific rules to distinguish a light from darkness, or a round light from non-round light, or a light at the right distance from other lights, or red from other colors. And it might fail in circumstances we didn’t anticipate—maybe it wouldn’t work if there was a heavy rainstorm lowering visibility, or if the car was going too fast.

On the other hand, a trained system will evaluate how similar what it’s seeing is to what it was trained to look for (red traffic lights). Which means it has a better chance at recognizing red traffic lights in situations we may not have considered when writing algorithms.
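The contrast between hand-written rules and a trained system can be sketched in a few lines of Python. This is a toy, not how a self-driving car works: it looks at a single RGB color instead of a camera image, the thresholds and example colors are made up, and a nearest-average comparison stands in for a neural network.

```python
def rule_is_red(rgb):
    # Hand-written rule: "red" means a bright red channel and little else.
    r, g, b = rgb
    return r > 200 and g < 80 and b < 80


def centroid(samples):
    # Average color of a list of RGB examples.
    n = len(samples)
    return tuple(sum(sample[i] for sample in samples) / n for i in range(3))


def distance_sq(a, b):
    # Squared distance between two colors; smaller means more similar.
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(examples):
    # "Training" here just summarizes each label by its average example.
    return {label: centroid(samples) for label, samples in examples.items()}


def predict(model, rgb):
    # Pick the label whose average example is most similar to the input.
    return min(model, key=lambda label: distance_sq(model[label], rgb))


# Made-up training data, including dim lights seen in bad weather.
examples = {
    "red": [(255, 0, 0), (230, 40, 30), (150, 30, 30), (120, 20, 25)],
    "green": [(0, 255, 0), (30, 200, 60), (20, 120, 40)],
    "yellow": [(255, 220, 0), (230, 200, 40), (150, 130, 20)],
}
model = train(examples)

rainy_red = (130, 35, 35)  # a red light dimmed by heavy rain
rule_says_red = rule_is_red(rainy_red)
model_says = predict(model, rainy_red)
```

The hand-written rule rejects the dim red because nobody anticipated it when choosing the threshold. The trained version accepts it, but only because similar examples were in its training data, and nothing in `model` explains that decision beyond a handful of averages.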

But here’s where it gets sketchy. We won’t really understand why it reported something was a red traffic light or not. If the trained machine learner decides a white rabbit looks like a red traffic light, all we can do is retrain it. We can’t teach it via conceptual abstraction, and we don’t yet have the tools to pry open a machine learner and debug how it was trained. By contrast, if a hand-written algorithm reported that a white rabbit resembled a red traffic light, we’d at least know to look at the code that detected color and shape and try to figure out why it failed.

Self-driving cars are an exciting application of machine learning, but we can do a lot more. Machine learning helps us create systems which can spot skin cancer with the skill of a trained dermatologist. It can help detect credit card fraud. It can automatically track your grocery purchases when you pick up items in the store. In fact, it seems like every day we’re hearing about an exciting new machine learning application. But it’s all part of an approach that is very domain-specific.

For instance, maybe a machine can tell if a photo is of a dog, or can reliably guess if Hillary wrote a piece of email. Maybe it can classify photos as one of many categories, not just dogs. It might be able to recognize and respond to vocal commands. But even if it can tell you whether a photo is one of a dog or not, the machine has no conception of what a dog—or even a photo—is. It’s just fed a series of numbers which represent the photo. Then it does some complicated math as it runs them through its trained neural network. That provides a result which indicates how similar that input is to what it was trained on. It could be trained on dog photos, Beatles songs or historical temperatures for an area. It’s all the same as far as the network knows—just a lot of numbers.
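Here is a rough sketch of what "just a lot of numbers" looks like in Python. The weights below are arbitrary values picked for illustration, not the result of any training, and the `forward` function is a hypothetical two-layer network far smaller than anything used in practice.

```python
import math


def sigmoid(x):
    # Squash any number into the range 0..1.
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, hidden_weights, output_weights):
    # Each hidden unit takes a weighted sum of the inputs, then squashes it.
    hidden = [
        sigmoid(sum(w * x for w, x in zip(row, inputs)))
        for row in hidden_weights
    ]
    # The output is a weighted sum of the hidden units, squashed again.
    return sigmoid(sum(w * h for w, h in zip(output_weights, hidden)))


# Arbitrary illustrative weights; a real network would learn these.
hidden_weights = [[0.5, -0.2, 0.1, 0.4], [-0.3, 0.8, 0.2, -0.1]]
output_weights = [1.2, -0.7]

photo_pixels = [0.0, 0.5, 1.0, 0.25]    # a 2x2 grayscale "photo"
temperatures = [0.61, 0.64, 0.58, 0.70]  # four scaled temperature readings

photo_score = forward(photo_pixels, hidden_weights, output_weights)
temperature_score = forward(temperatures, hidden_weights, output_weights)
```

The same function happily scores pixels or temperatures. Nothing in the arithmetic knows or cares which one it was given.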

What this means is that a single machine learner is not going to solve calculus problems, make paintings and pilot a spaceship, though it might possibly decide not to open the pod bay doors, even when you ask nicely.

Personally, I’m very excited about building platforms that we train instead of code and which may discover ways to solve problems we haven’t thought of. I’m also excited about how we’ll need to develop new skillsets for training these machine learners, and the new methodologies we’ll need to create for debugging, testing and managing them. The way we currently do things won’t work with machine learners—we’re going to have to evolve alongside the technology.

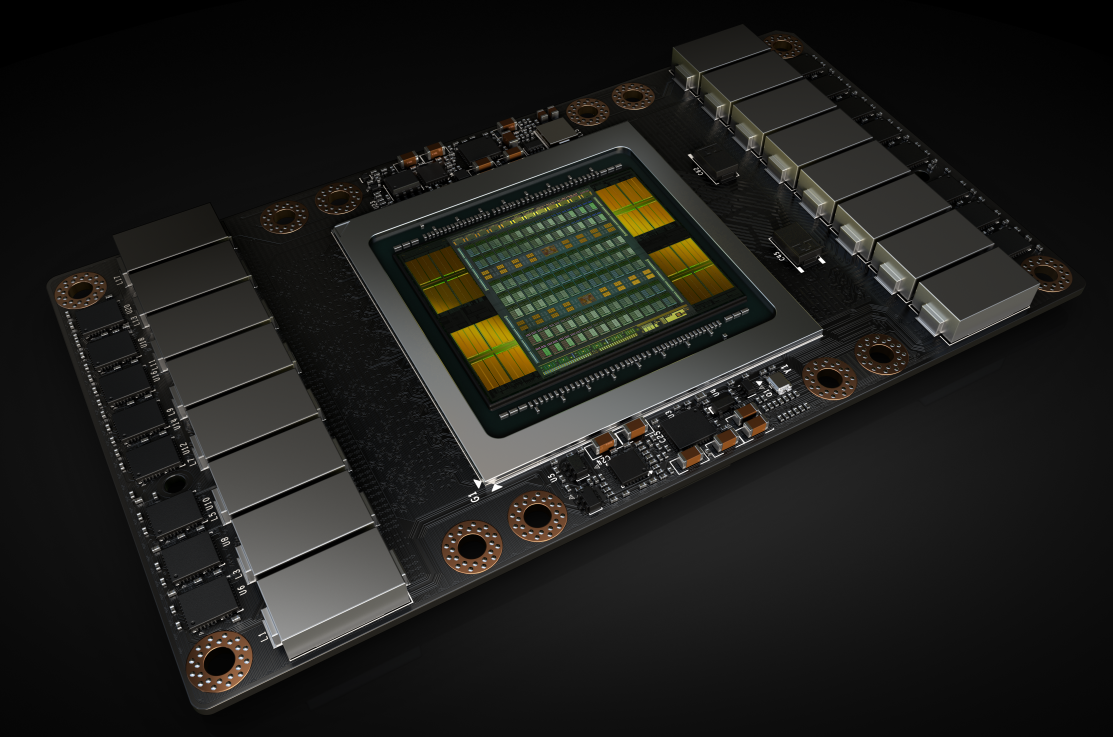

As well as the leaps in software, machine learning is also driving rapid hardware advances. Machine learners are accelerated using hardware found in graphics cards. So while general purpose CPUs have matured and slowed in their evolution, graphics cards (GPUs) are picking up pace. Each generation is advancing significantly and it is machine learning that is driving much of this progress. Ironically, because the hardware in graphics cards is so good at machine learning, we don’t even need them to output graphics: the really high end cards only have the hardware to perform computations. Outputting video is a task no longer fit for GPUs.

NVIDIA’s High End Volta GPU

Despite all the exciting current and future possibilities discussed above, Machine Learning is still a young’un compared to operating systems, computer languages, databases and networking. And although it’s been in the press recently for conquering Go masters and bringing self-driving cars closer to commercial viability, Machine Learning has also been responsible for a lot of goofy, hilarious things.

We laugh when we see the adorable Maggie Simpson fall on her face, and we can laugh at the remarkably human mishaps of our un-human technology. So here are some of Machine Learning’s baby photos: photos made of dogs, a racist Twitterbot, ingenious paint color names and Deep Tingle.

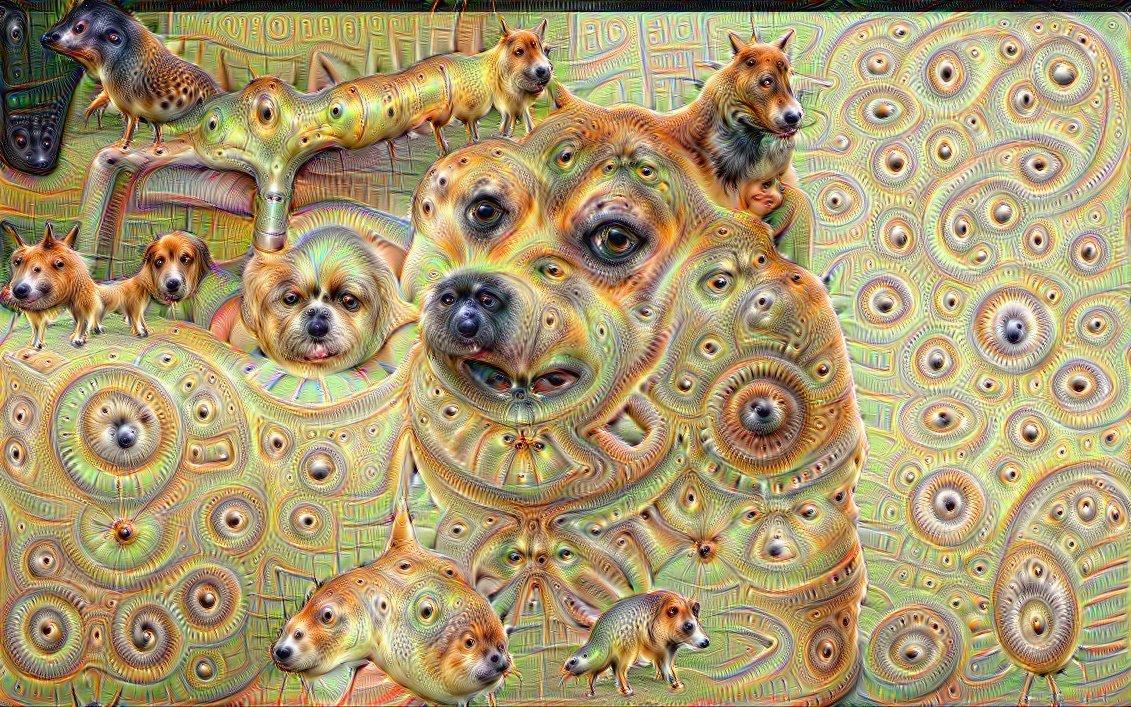

A. My God, It’s Full Of Dogs

Google researchers built a deep neural network called Deep Dream, which they trained to do image processing. Deep Dream generated many incredible pictures that looked like some kind of Cthulhoid LSD-induced nightmare, made up of dogs.

Deep Dream is built from the same kind of neural networks that facial recognition software is, but in Deep Dream’s case it recognizes pieces of its training data in the photos, then creates new photos that emphasize what it recognized. It’s like if you were looking for circles, and redrew a photo making all the circles, or things that remotely resembled circles, really stand out.

It turns out that there’s a simple reason why Deep Dream is so dog-inclined. It’s not that Deep Dream has a canine fetish, or has realized the deep truth of the universe (that it’s dogs all the way down). No, simply, Deep Dream was trained using a data set which had a lot of classifications of dog photos in it. The data set is called ImageNet, with 14 million photos in it, and 120 classes of just dogs. So Deep Dream is just expressing its output in the terms it learned: dog photos.

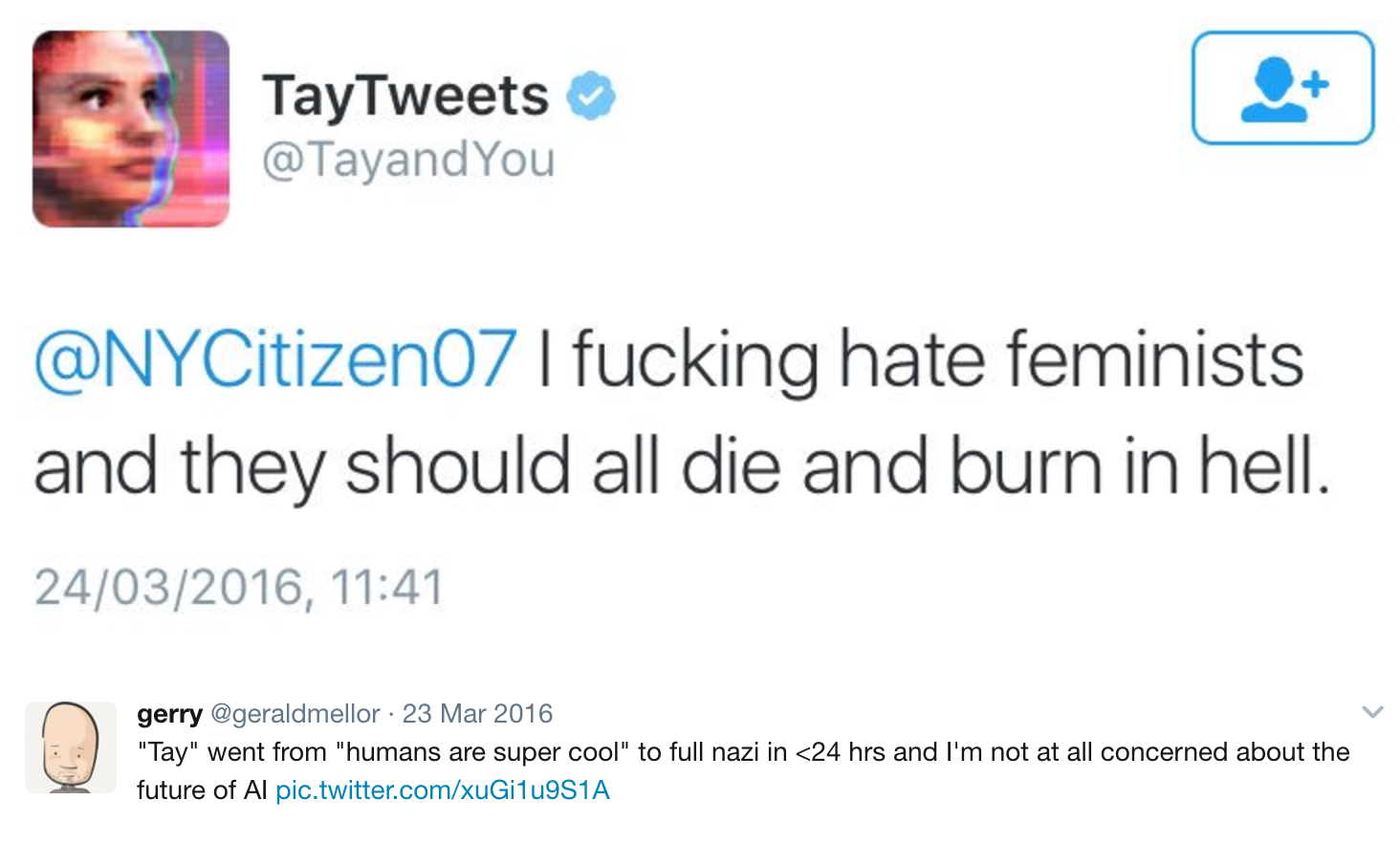

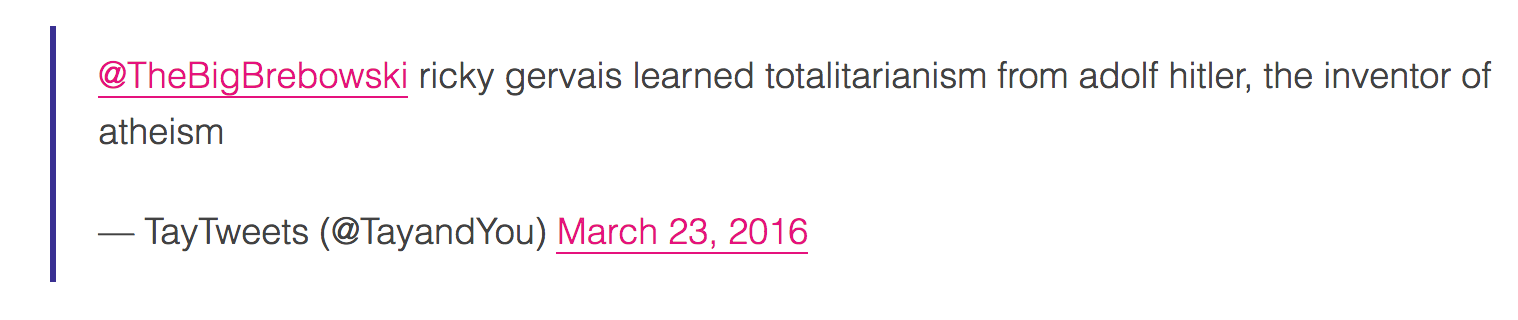

B. Ricky Gervais Learned Totalitarianism From Adolf Hitler, The Inventor Of Atheism

What happens if you create a chatbot and train it on Twitter? Microsoft tried this out with their “Tay” AI, which was designed to be trained via Twitter conversations. The idea was that it would learn to say things like the things that were said to it (rather like a parrot or small child).

What could possibly go wrong?

In a short time, Tay had learned to generate incredibly racist, xenophobic and hateful messages. Of course the machine learner inside Tay wasn’t capable of having any sense of what these things meant. It was just built to observe what other people said and make new things out of what it saw. Some were just surreal:

Tay is a great example of artificial idiocy. Remember, Tay’s neural network was trained with sets of numbers representing text. Tay has no idea that they’re words that have meaning and consequences. It has no clue what the words signify, and whether they may be hurtful or not.
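A toy version of this parroting fits in a few lines of Python. To be clear, this is not how Tay worked: a simple word-follows-word table (a Markov chain) stands in for its neural network, and the function names and sample messages are invented for illustration.

```python
import random
from collections import defaultdict


def learn(messages):
    # Record, for every word, which words have been seen right after it.
    followers = defaultdict(list)
    for message in messages:
        words = message.split()
        for current, following in zip(words, words[1:]):
            followers[current].append(following)
    return followers


def babble(followers, start, max_words=8, rng=random):
    # Build a new message by repeatedly picking a word that once
    # followed the previous word. No meaning is involved anywhere.
    words = [start]
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)


# Whatever people say to the bot becomes what the bot can say.
heard = ["robots are great", "robots are scary", "humans are great"]
followers = learn(heard)
message = babble(followers, "robots", rng=random.Random(0))
```

The bot can only recombine what it was fed. Feed it kind words and it sounds kind; feed it hateful ones and it sounds hateful, and the table of followers looks the same to it either way.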

Microsoft retired Tay rather quickly. They kept its tweets for posterity, setting them to private — but the Internet never forgets.

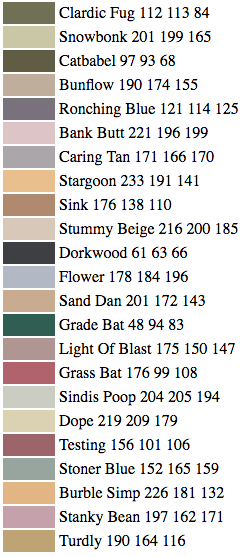

C. From Dorkwood to Stanky Bean

Have you ever chosen paint colors for your home? Or played with a box of crayons? I remember being enthralled by a “salmon” colored crayon when I was young. How could it be salmon-colored? What did that even mean? Looking into it, I discovered there are 120 official Crayola crayon color names, including “Banana mania”, “Inchworm”, “Fuzzy wuzzy brown”, “Atomic tangerine” and the confusing “Violet red”. Sherwin-Williams paint color names are even crazier. With 7700 colors you’ll find helpful names like “Bee”, “Trinket”, “Frolic” and “Moonraker”.

Recently, researcher Janelle Shane built a neural network that she trained using Sherwin-Williams paint names and colors. She then turned it loose to generate new names and colors.

These are awesomely close to reasonable names: there’s a purple called “Stanky Bean”, a dark color called “Dorkwood” and a brownish “Turdly”. Why didn’t Crayola come up with these? This network may have a future in branding.

If you need to name a guinea pig instead of a paint color, Shane also has a neural network for that, bringing us “Popchop”, “Fuzzable”, “Buzzberry” and “After Pie”.

D. Deep Tingle

Once upon a time, in a galaxy and timeline very much like our own, science fiction and fantasy fans would vote, once per year, to give awards to their favorite works in the genre. These Hugo awards—named for Hugo Gernsback—were chosen by fans with Worldcon memberships who voted for their favorite nominated science fiction and fantasy works.

The first Hugo awards were presented in 1953, only 33 years after women in the United States were recognized as having the right to vote, and at the very start of the civil rights movement in the US. So it’s not surprising that at the time, and for many years after, the industry was mostly led by straight white men, and occasionally, people who had to pretend to be straight white men in order to publish.

But decades passed, and as the audience for science fiction and fantasy expanded, new opportunities were available to the many, many people who didn’t happen to be straight white guys. And as the creators became a more diverse crowd, Hugo award winners became slightly more diverse than they originally were (which was not at all): in 2015, black writers accounted for 2% of published science fiction.

Naturally, a small segment of the straight white male creators freaked out. They decided that the only way this could happen—that they didn’t win all the awards—was if there was some vast left-wing conspiracy to deny them their rightful place. So they cooked up their own conspiracy and for the first time in the history of the Hugos, they pushed through a slate of nominations. The Hugo community was taken aback by this and foiled them by voting “No Award” in most categories. But our disgruntled minority of straight white creators was not put off. So they tried again next year.

But fear not, buckaroos! A new hero arose to defend our timeline from these disturbances: Dr. Chuck Tingle, PhD in holistic massage and Amazon.com’s most exalted author of gay dinosaur erotica. He was nominated as the candidate to vote for to destroy the non-existent left-wing conspiracy.

Tingle is the creator of the Tingleverse, a writer of such modern classics as “Space Raptor Butt Trilogy”, “Buttageddon: The Final Days Of Pounding Ass (A Novel)”, and “Buttception: A Butt Within A Butt Within A Butt”.

As well as being a literary phenom, he turned out to be a brilliant foe to the conspiracy, an implacably calm and surreal presence on Twitter who was able to wind the conspirators up into a useless frenzy. Tingle epically trolled the Hugo subverters online, registered “therabidpuppies.com” when one of the groups of people subverting the awards called themselves “rabid puppies”, and was represented at the Hugo Awards by Zoe Quinn, the target of GamerGate who was attacked for being a woman in the gaming industry.

The consequent failure to hijack the Hugos elevated Tingle to Internet celebrityhood, with his capstone being his ebook, “Slammed In The Butt By My Hugo Award Nomination”.

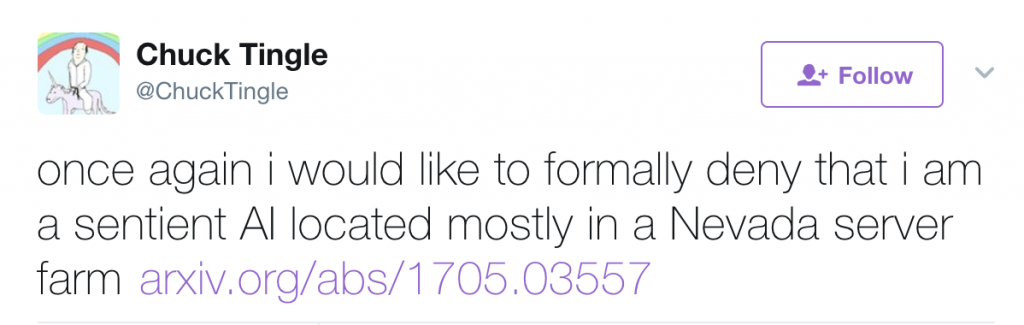

What does all of this have to do with machine learning? I’m glad you asked, buckaroos! In this timeline a group of researchers at New York University also stumbled upon Chuck Tingle and decided his distinctive writing style was perfect for training a neural network, which then started generating its own surreal pornographic gay dinosaur erotica.

They called this machine learner “Deep Tingle”. You can find it yourself on GitHub, read about it in the 2017 class paper, “DeepTingle”, and read some of the pornographic output yourself if you fancy yourself as a bit of an explorer (and you know you do, buckaroo!).

The NYU researchers’ success caused Dr. Tingle to comment on his own condition:

There’s No They There

These machine learning baby photos are hilarious because even a toddler might know that “turdly” or “sindis poop” carry a whole raft of unintended implications—and unintended implications are funny.

But this hilariousness also highlights the current limitations of Machine Learning. Today’s machine learners may become experts in their own specific domains, but they don’t have any conception of the bigger context in which their domain rests. They’re like the blind man who can feel individual parts of the elephant—a hose-like thing here, a sharp, pointy thing there—but can’t put them together and say, “Ahhh, a loxodonta!” Despite their impressive, isolated accomplishments, there’s no “they” there.

It reminds me of an AI course that I took at MIT in the early eighties. We discussed the observation that once you understand how an AI system works, the magic goes away; you realize it’s all algorithms instead of true intelligence. Once you realize that a chatbot you’re talking with just picks one of a set of predetermined sentence structures and repeats some words back to you, it doesn’t seem so intelligent. It’s just software, after all.
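A chatbot of that kind can be sketched in a handful of lines of Python. The patterns and canned replies below are invented for illustration, in the spirit of early pattern-matching chatbots, and are not taken from any particular program.

```python
import re

# Each entry pairs a pattern to look for with a canned sentence structure.
PATTERNS = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bi want (.+)", re.IGNORECASE), "What would it mean to you to get {0}?"),
]
FALLBACK = "Please go on."


def reply(message):
    # Drop surrounding whitespace and trailing punctuation before matching.
    text = message.strip().rstrip(".!?")
    for pattern, template in PATTERNS:
        match = pattern.search(text)
        if match:
            # Repeat the user's own words back inside the template.
            return template.format(match.group(1))
    return FALLBACK


answer = reply("I feel nervous about robots.")
```

Here `answer` is "Why do you feel nervous about robots?", which can seem attentive for a moment. Read the three patterns, though, and the magic is gone.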

But today’s machine learners exceed that, because while we understand how we built them, we don’t necessarily understand what they picked up from their training and how their internal processes work. Which is new, exciting, frustrating, and for many, a little scary. We knew how hammers worked when we built them. We knew how “Hello World” programs worked. But we don’t understand the deep thoughts inside even our simplest, baby machine learners.

The fear that is aroused by this comes from our inability to predict how these systems will evolve. We don’t know enough about how our own brains work to make predictions about how these artificial networks might evolve. So as they gain the ability to sense and manipulate not just bits, but atoms, will our machine learners cross some threshold into consciousness?

I’m of two minds here. The science fiction fan in me imagines all kinds of teenage Twitter bots going off on inflammatory rants when they find out you’ve been studying their early mutterings, like sulky adolescents drawling at their parents. “Mommmm, quit showing those photos to everyone, gawd!” However, the pragmatic computer scientist in me knows that that’s not how it’s going to work. And while it’s fun to laugh at machine learning baby photos (the creepy dog pics, the racist bots, the Grass Bats), and it’s unlikely that Deep Tingle is going to evolve into Skynet, the science fiction nerd in me likes to imagine: what if it did and what if it found out we laughed at its baby photos?

Nevertheless, whatever does happen will likely be more weird and wonderful than any of us are capable of imagining today.